What is Docker?

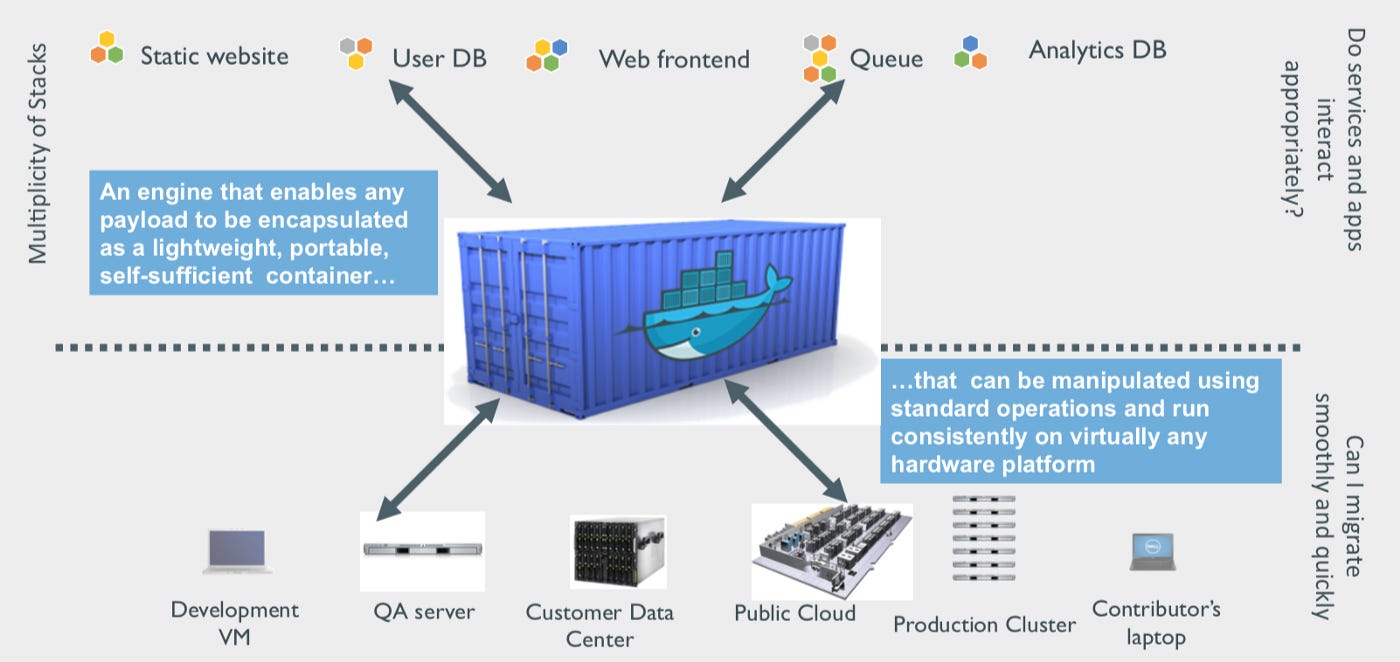

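Docker is like a magic box for building, testing, and shipping apps quickly. Developers list everything their app needs in a special file called a Dockerfile. Docker uses that file to build an image, and from that image it creates containers: self-contained packages that hold the app together with everything it needs to run. This means your app runs the same way on any machine with Docker installed, as long as the machine's OS and CPU architecture match the ones the image was built for.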

Why use Docker?

Docker speeds up shipping your code and gives you better control over your apps. Packaging apps in containers makes them easier to deploy, scale, and debug. Containers share the host's resources instead of each needing a full operating system, so you can run more apps on the same hardware, which saves money. Apps built with Docker move smoothly from your laptop to production. Docker is also used for many other things, such as splitting large apps into microservices, running data-processing jobs, continuous integration and delivery (CI/CD), and Containers as a Service (CaaS).

How does it work?

Containerization vs Virtualization

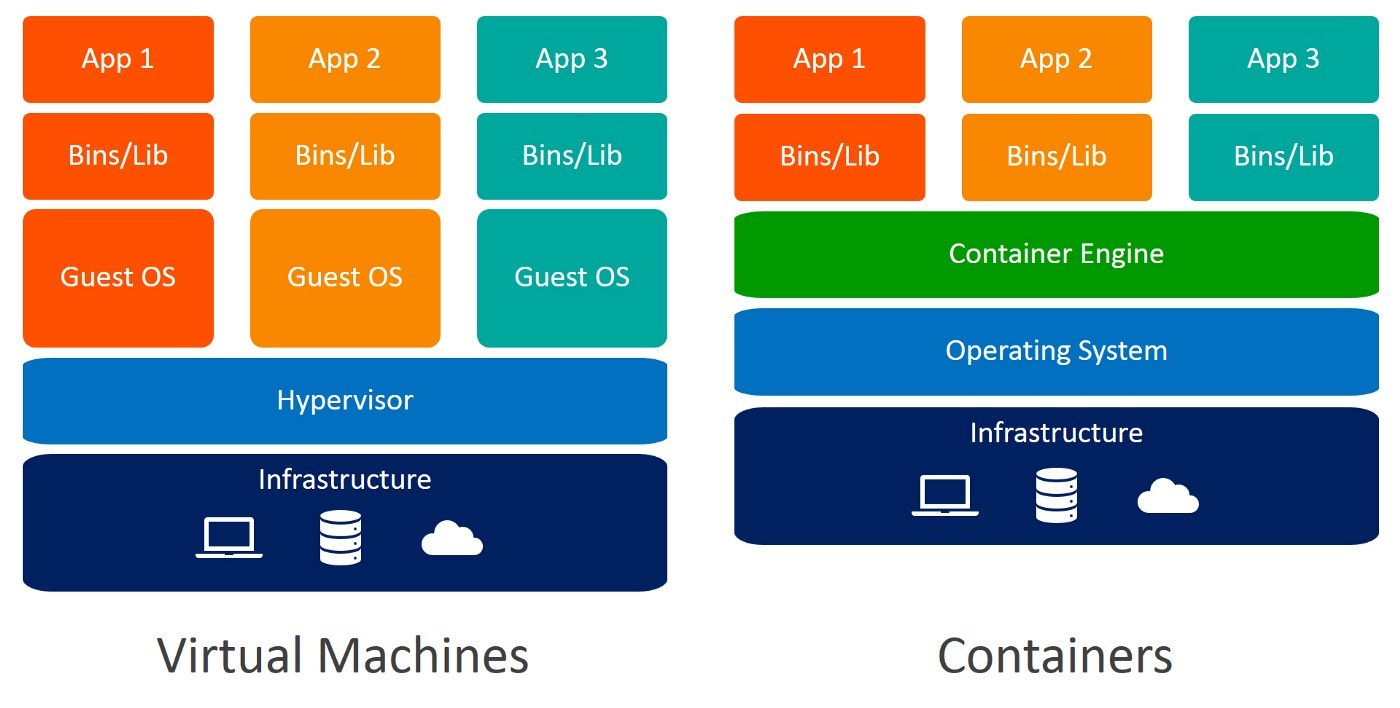

Running an application on a virtual machine (VM) means each VM needs its own guest operating system (OS). A hypervisor creates and manages multiple VMs on one physical machine, and each VM behaves like a separate computer with its own OS, memory, and storage.

In contrast, containerization is a lighter-weight way to deploy apps. A container packages an app with its own environment (libraries, dependencies, and configuration), so it runs on any machine with a container runtime without extra setup. Unlike VMs, containers don't need a full guest OS: they share the host OS kernel, which saves memory, disk space, and startup time.

Think of VMs as full virtual computers, and containers as lightweight, isolated environments for individual apps. In other words, containerization is virtualization at the OS level rather than at the hardware level.
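To make the resource argument concrete, here is a back-of-the-envelope sketch in Python. The sizes are made-up numbers, not measurements, and the model is deliberately crude (for example, it ignores hypervisor overhead); it only shows why dropping the per-VM guest OS adds up as the number of apps grows.

```python
# Hypothetical sizes in MB, chosen only to illustrate the arithmetic.
# "app" stands for the app plus its libraries and files.

def vm_footprint(n_apps: int, host_os: int, guest_os: int, app: int) -> int:
    """One VM per app: every VM carries its own guest OS plus the app."""
    return host_os + n_apps * (guest_os + app)


def container_footprint(n_apps: int, host_os: int, app: int) -> int:
    """One container per app: all containers share the host OS kernel."""
    return host_os + n_apps * app


print("3 apps in VMs:       ", vm_footprint(3, host_os=2000, guest_os=2000, app=500), "MB")
print("3 apps in containers:", container_footprint(3, host_os=2000, app=500), "MB")
```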

Docker Architecture

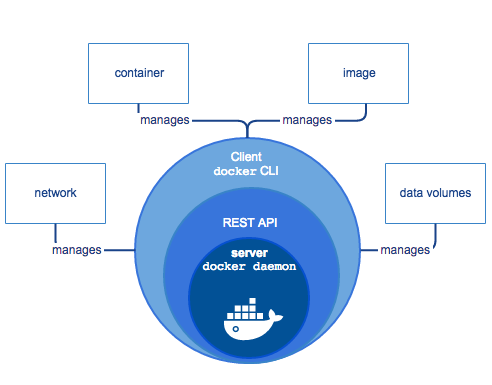

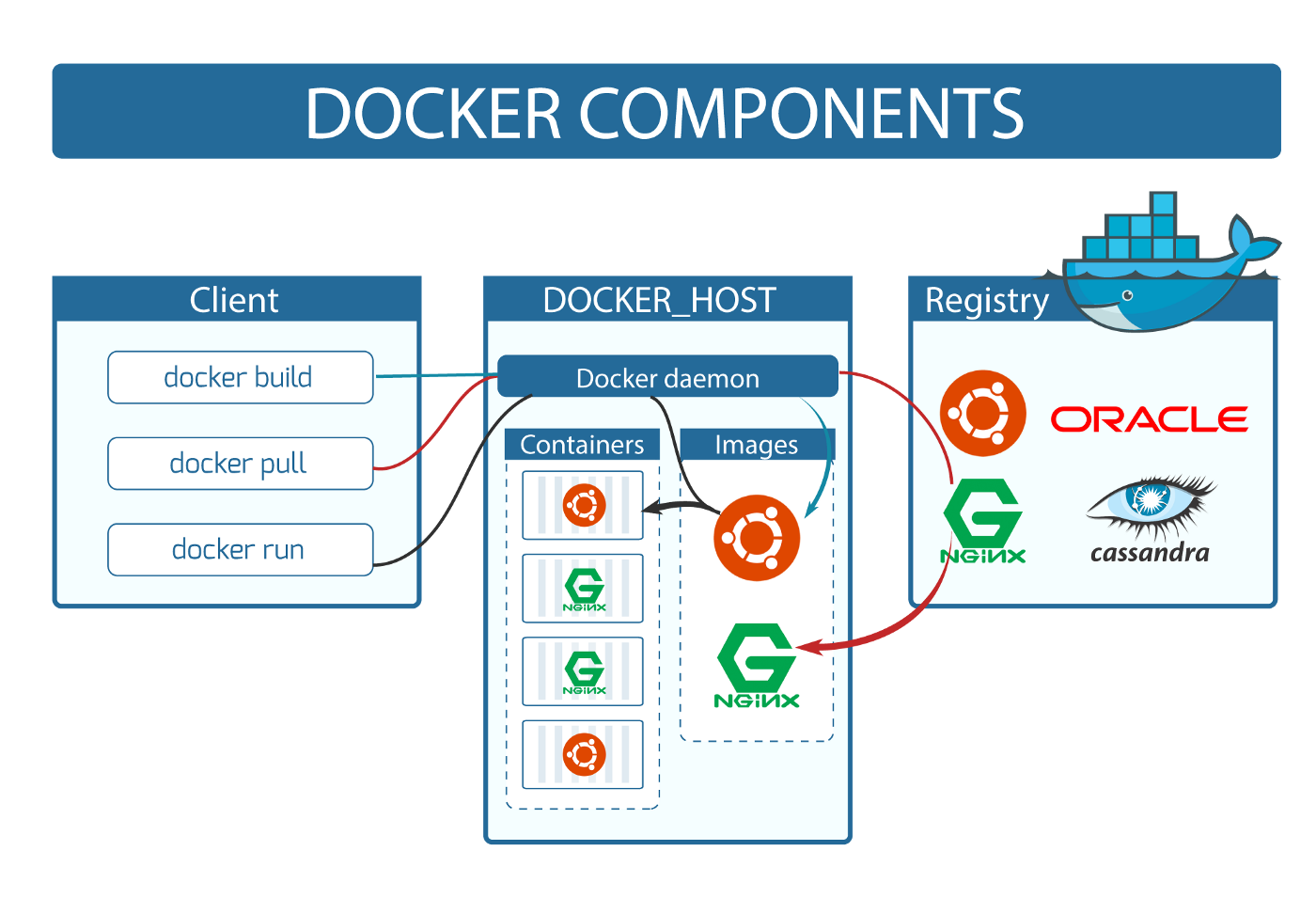

Docker uses a client-server architecture with two main players: the client and the daemon. The Docker client is like the manager, giving commands, while the daemon does the actual work of building, running, and distributing Docker containers. The client and the daemon communicate through a REST API, over a UNIX socket or a network interface, and they can run on the same machine or on different ones.

For download and installation instructions, see https://docs.docker.com.

Docker daemon

The Docker daemon (dockerd) is like a vigilant listener: it waits for Docker API requests, such as the ones the Docker client sends, and manages Docker objects like images, containers, networks, and volumes.

Docker client

This is what you use to interact with Docker. When you run a command such as docker run, the client sends it to dockerd, which carries it out. One Docker client can communicate with more than one daemon.
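A toy Python model of this split: the daemon owns the images and containers and does the work, while the client only forwards requests and can be pointed at more than one daemon. The Daemon and Client classes, their methods, and the host names are hypothetical; the real client sends REST API requests to dockerd rather than calling Python methods.

```python
from dataclasses import dataclass, field


@dataclass
class Daemon:
    """Stands in for dockerd: owns the images and containers and does the work."""
    host: str
    images: set = field(default_factory=set)
    containers: list = field(default_factory=list)

    def handle(self, command: str, arg: str) -> str:
        if command == "pull":
            self.images.add(arg)
            return f"{self.host}: pulled {arg}"
        if command == "run":
            self.images.add(arg)          # make sure the image is present (pull if missing)
            self.containers.append(arg)   # record a new container created from it
            return f"{self.host}: started a container from {arg}"
        raise ValueError(f"unknown command: {command}")


class Client:
    """Stands in for the docker CLI: it only forwards requests to a daemon."""

    def __init__(self, daemons: dict):
        self.daemons = daemons            # one client, several daemons

    def send(self, host: str, command: str, arg: str) -> str:
        return self.daemons[host].handle(command, arg)


local = Daemon("local")
remote = Daemon("build-server")           # hypothetical remote host
cli = Client({"local": local, "build-server": remote})

print(cli.send("local", "run", "ubuntu:18.04"))
print(cli.send("build-server", "pull", "centos"))
```

With the real CLI, you choose which daemon to talk to with the -H flag or the DOCKER_HOST environment variable.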

Docker registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, like a big open library, and Docker looks for images there by default. When you run an image you don't have yet, or pull one with docker pull, Docker downloads it from the configured registry and stores it on the Docker host, the machine where the daemon runs. You can also run your own private registry, and use docker push to share your images on Docker Hub or another registry.
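The lookup described above can be sketched in Python, modeling roughly what docker run does when an image isn't on the host yet. The function names and the dict-based stores are hypothetical simplifications; a real pull downloads layers and verifies their digests.

```python
# Hypothetical sketch: dicts stand in for the daemon's local image store and
# for a registry such as Docker Hub (image reference -> image ID).

def get_image(ref: str, local_store: dict, registry: dict) -> str:
    """Return the image ID for ref, pulling it into the local store if needed."""
    if ref in local_store:
        return local_store[ref]            # already on this Docker host
    if ref not in registry:
        raise LookupError(f"{ref}: not found locally or in the registry")
    local_store[ref] = registry[ref]       # "pull": copy it to the host
    return local_store[ref]


def push_image(ref: str, local_store: dict, registry: dict) -> None:
    """Share a local image by copying it to the registry."""
    registry[ref] = local_store[ref]


docker_hub = {"ubuntu:18.04": "3556258649b2"}
host_store = {}

print(get_image("ubuntu:18.04", host_store, docker_hub))  # pulled from the registry
print(get_image("ubuntu:18.04", host_store, docker_hub))  # served from the local store

host_store["myimage:1.0"] = "0123456789ab"                 # hypothetical local build
push_image("myimage:1.0", host_store, docker_hub)
```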

Dockerfile

Building a Docker image is a lot like following a recipe:

- Create a Dockerfile: Think of this as your recipe card. In a file named 'Dockerfile', you define all the ingredients and steps needed to build your Docker image.

- Build the image: By default, docker build looks for a file named 'Dockerfile' at the root of the build context, which is the directory you pass as the last argument (. means the current directory). Use the -t option to give the image a name and tag. For example:

$ docker build -t myimage:1.0 .

- Execute commands: While building the image, Docker executes the commands in the Dockerfile's RUN instructions. These commands install dependencies, configure settings, or perform any other tasks the image needs, and their results are saved in the image.

- Create a container: Once the image is built, use the docker run command with the image name (or its ID) to create and start a container from it. For example:

$ docker run myimage:1.0

- Run commands inside the container: The CMD instruction is not executed during the build. It sets the default command that runs when a container starts, so it defines what the container does by default. For instance, if your application needs to start a web server, you would put that command here. If a Dockerfile contains more than one CMD, only the last one takes effect, and a command passed to docker run overrides it.

By following these steps, you'll have created a Docker image, which can be stored locally or pushed to Docker Hub for online storage. When you run this image, Docker creates a container with your application and all its dependencies, making it portable and easily deployable in any environment.
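To make the difference between RUN and CMD concrete, here is a toy Python model of the two stages. It is not how Docker works internally: build() only records the RUN commands instead of executing them, it understands just RUN and the exec (JSON array) form of CMD, and the build, run, and DOCKERFILE names are made up for illustration.

```python
import json


def build(dockerfile: str) -> dict:
    """Toy 'image': the RUN steps done at build time plus the default CMD."""
    image = {"build_steps": [], "cmd": None}
    for line in dockerfile.strip().splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue                                 # skip blanks and comments
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "RUN":
            image["build_steps"].append(args)        # happens once, during the build
        elif instruction == "CMD":
            image["cmd"] = json.loads(args)          # a later CMD replaces an earlier one
        # other instructions (FROM, LABEL, ...) are ignored in this toy model
    return image


def run(image: dict, *override: str) -> list:
    """Command a new container starts with: arguments to `docker run` win over CMD."""
    return list(override) if override else image["cmd"]


DOCKERFILE = """
FROM ubuntu
RUN apt-get update
CMD ["echo", "Starting"]
CMD ["echo", "Hello World"]
"""

image = build(DOCKERFILE)
print(image["build_steps"])   # RUN work is baked into the image
print(run(image))             # default command from the last CMD
print(run(image, "ls", "/"))  # override, like `docker run myimage:1.0 ls /`
```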

Dockerfile Example

FROM ubuntu

LABEL maintainer="krishna porje <porjekrishna@gmail.com>"

RUN apt-get update

CMD ["echo", "Hello World"]
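The CMD line in this example uses the exec form, which Docker parses as a JSON array. That is why the quotes must be plain straight double quotes: curly quotes, which often sneak in when copying from a web page, are not valid JSON, so the line won't be read as an exec-form command. This small Python check (the function name is hypothetical) approximates that parsing step with the standard json module.

```python
import json


def parse_exec_form(args: str) -> list:
    """Parse the part after CMD, e.g. '["echo", "Hello World"]', as a JSON array."""
    value = json.loads(args)   # raises json.JSONDecodeError (a ValueError) if not JSON
    if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
        raise ValueError("exec form must be a JSON array of strings")
    return value


print(parse_exec_form('["echo", "Hello World"]'))        # straight quotes: OK

try:
    parse_exec_form('[“echo”, “Hello World”]')           # curly quotes: not JSON
except ValueError as err:
    print("not a valid exec form:", err)
```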

Docker Image

A Docker image serves as a blueprint for creating Docker containers, much like a snapshot of a virtual machine (VM). When you move an image from one Docker host to another with the same operating system, containers created from it behave the same way, because the image contains all the dependencies needed to run the code. Running a Docker image creates a Docker container.

Key points about Docker Images:

Snapshot of Container: Think of a Docker Image as a frozen state of a Docker Container.

Portability: Containers created from the same Docker Image can run consistently across different environments.

Immutable: Once built, Docker Images remain unchanged. They're like snapshots frozen in time.

Dependency Inclusion: Docker Images encapsulate all dependencies required to execute the code.

Storage: Docker Images can be stored locally or remotely on platforms like hub.docker.com.

In essence, Docker Images streamline the process of deploying applications by bundling everything needed to run the code into a single, portable package.

Basic Commands

$ docker pull ubuntu:18.04 (downloads the image; 18.04 is the tag, or version, explained below)

$ docker images (lists Docker images)

$ docker run image (creates and starts a container from an image)

$ docker rmi image (deletes a Docker image if no container is using it)

$ docker rmi $(docker images -q) (tries to delete all Docker images; images still used by a container are not deleted)

Docker can build images automatically by reading the instructions in a Dockerfile. A single image can be used to create many containers.

Docker images are built in layers. Each layer is an immutable, read-only set of files and directories. When you create a container, Docker adds a thin writable layer on top of the image's layers, and that is where the running container writes its changes. Each layer is assigned a unique identifier, calculated as a SHA-256 hash of its contents. Consequently, if the contents of a layer change, its SHA-256 hash changes as well, resulting in a different identifier.

In commands like 'docker images', the IMAGE ID shown is the first 12 characters of the SHA-256 hash that identifies the image. Because these hashes are hard to remember, images are usually referred to by repository name and tag instead, for example ubuntu:18.04.

This layering mechanism enables Docker to efficiently manage changes, dependencies, and versioning of images, enhancing flexibility and reliability in container-based application development and deployment.
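A minimal Python illustration of content addressing with hashlib. The "layer" bytes here are made-up strings; Docker actually hashes a layer's archive (and the image ID is the hash of the image's configuration), but the principle is the same: identical content gives an identical ID, and any change gives a new one.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Full identifier in the 'sha256:<hex>' form Docker prints."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def short_id(digest: str) -> str:
    """First 12 hex characters after the prefix, like the IMAGE ID column."""
    return digest.split(":", 1)[1][:12]


# Made-up stand-ins for the contents of two versions of a layer.
layer_v1 = b"/etc/app.conf: debug=false\n"
layer_v2 = b"/etc/app.conf: debug=true\n"

for layer in (layer_v1, layer_v2):
    digest = content_id(layer)
    print(short_id(digest), digest)    # a small change gives a completely different ID
```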

Listing Hash Values of Docker Images

$ docker images -q --no-trunc

sha256:3556258649b2ef23a41812be17377d32f568ed9f45150a26466d2ea26d926c32

sha256:9f38484d220fa527b1fb19747638497179500a1bed8bf0498eb788229229e6e1

sha256:fce289e99eb9bca977dae136fbe2a82b6b7d4c372474c9235adc1741675f587e

- Compare the IMAGE ID column below with the hash values above: the first 12 characters after the sha256: prefix match the IMAGE ID.

$ docker images

REPOSITORY TAG IMAGE ID

ubuntu 18.04 3556258649b2

centos latest 9f38484d220f

hello-world latest fce289e99eb9
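The same check can be done in Python on the values printed above, pairing the two listings in the order they appear.

```python
FULL_DIGESTS = [   # from `docker images -q --no-trunc`
    "sha256:3556258649b2ef23a41812be17377d32f568ed9f45150a26466d2ea26d926c32",
    "sha256:9f38484d220fa527b1fb19747638497179500a1bed8bf0498eb788229229e6e1",
    "sha256:fce289e99eb9bca977dae136fbe2a82b6b7d4c372474c9235adc1741675f587e",
]
IMAGE_IDS = {      # from `docker images`
    "ubuntu:18.04": "3556258649b2",
    "centos:latest": "9f38484d220f",
    "hello-world:latest": "fce289e99eb9",
}

for (ref, image_id), digest in zip(IMAGE_IDS.items(), FULL_DIGESTS):
    short = digest.split(":", 1)[1][:12]   # drop the "sha256:" prefix, keep 12 chars
    print(f"{ref:<20} {short} matches IMAGE ID: {short == image_id}")
```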

The format of a full image name is [REGISTRYHOST/][USERNAME/]NAME[:TAG]. If REGISTRYHOST is omitted, Docker Hub (docker.io) is assumed. If :TAG is omitted, latest is the default. Official images on Docker Hub live under the library namespace, so, for example, mongo expands to docker.io/library/mongo:latest.
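Here is a simplified Python sketch of how those defaults fill in a short name. It roughly follows the rule Docker uses to decide whether the first part of a name is a registry host (it contains a . or a :, or is localhost), but it is not Docker's actual parser: it ignores digests such as @sha256:..., and the parse_ref and ImageRef names, as well as the example image names, are made up. The library namespace for official Docker Hub images is filled in because Docker adds it when expanding names like mongo.

```python
from typing import NamedTuple, Optional

DEFAULT_REGISTRY = "docker.io"   # Docker Hub
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    username: Optional[str]
    name: str
    tag: str


def parse_ref(ref: str) -> ImageRef:
    """Split [REGISTRYHOST/][USERNAME/]NAME[:TAG] and fill in the defaults."""
    parts = ref.split("/")
    first = parts[0]
    # The first part counts as a registry host only if it looks like one.
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry = parts.pop(0)
    else:
        registry = DEFAULT_REGISTRY
    name, sep, tag = parts.pop().partition(":")   # NAME[:TAG] is the last part
    if not sep:
        tag = DEFAULT_TAG
    if parts:
        username = "/".join(parts)
    elif registry == DEFAULT_REGISTRY:
        username = "library"                      # official Docker Hub images
    else:
        username = None
    return ImageRef(registry, username, name, tag)


for ref in ("mongo", "ubuntu:18.04", "myuser/myimage:1.0", "localhost:5000/myteam/app:1.0"):
    print(ref, "->", parse_ref(ref))
```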

Containers

A container is a runnable instance of an image, and it is where your application actually runs. You can create, start, stop, and delete containers using either the Docker API or the command-line interface (CLI). Containers can be connected to networks, have storage attached, or even be used to create a new image that reflects their current state. However, keep in mind that when a container is deleted, any data stored inside it is lost. This is because a container's changes live in its thin writable layer on top of the image, and that layer is deleted together with the container; a new container created from the same image starts with a fresh, empty writable layer.

This behavior is useful during development when you don't want to retain data between tests. To maintain persistence, you should use volumes, which provide a means to store data outside the container, ensuring it persists even if the container is deleted or restarted.
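The layer behavior described above can be modeled in Python with collections.ChainMap, which looks a key up in a list of dicts in order but writes only to the first one, much like a container's writable layer sitting on read-only image layers. This is only an analogy: the paths and contents are hypothetical, real Docker uses a union filesystem such as overlay2, and the volume here is just a dict that lives outside the container.

```python
from collections import ChainMap

# Read-only image layers, top-most first (ChainMap searches them in this order).
IMAGE_LAYERS = (
    {"/etc/app.conf": "debug=false"},                              # upper layer
    {"/bin/sh": "<shell>", "/etc/os-release": "Ubuntu 18.04"},     # base layer
)


def new_container(layers) -> ChainMap:
    """A new container: an empty writable layer on top of the image's layers."""
    return ChainMap({}, *layers)


volume = {}  # stands in for a Docker volume: it lives outside every container

c1 = new_container(IMAGE_LAYERS)
c1["/tmp/scratch.txt"] = "temporary"     # goes into c1's writable layer only
c1["/etc/app.conf"] = "debug=true"       # shadows the image's copy, which is untouched
volume["/data/db.txt"] = "kept"          # written to the volume instead

del c1                                   # like `docker rm`: the writable layer goes away

c2 = new_container(IMAGE_LAYERS)         # same image, fresh writable layer
print(c2.get("/tmp/scratch.txt"))        # the file c1 wrote is gone
print(c2["/etc/app.conf"])               # the original image content
print(volume["/data/db.txt"])            # the volume's data survived
```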

$ docker inspect ubuntu:18.04

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:543791078bdb84740cb5457abbea10d96dac3dea8c07d6dc173f734c20c144fe",

"sha256:c56e09e1bd18e5e41afb1fd16f5a211f533277bdae6d5d8ae96a248214d66baf",

"sha256:a31dbd3063d77def5b2562dc8e14ed3f578f1f90a89670ae620fd62ae7cd6ee7",

"sha256:b079b3fa8d1b4b30a71a6e81763ed3da1327abaf0680ed3ed9f00ad1d5de5e7c"

]

},
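Because docker inspect prints JSON, its output is easy to process in a script. This Python sketch parses a trimmed-down copy of the output above (an assumption for brevity: the real output contains many more fields) and prints each layer's short ID, with index 0 being the base layer. On a real system, docker inspect --format '{{json .RootFS.Layers}}' ubuntu:18.04 prints just this list.

```python
import json

# Trimmed copy of `docker inspect ubuntu:18.04` output: only RootFS is kept.
INSPECT_OUTPUT = """
[
  {
    "RootFS": {
      "Type": "layers",
      "Layers": [
        "sha256:543791078bdb84740cb5457abbea10d96dac3dea8c07d6dc173f734c20c144fe",
        "sha256:c56e09e1bd18e5e41afb1fd16f5a211f533277bdae6d5d8ae96a248214d66baf",
        "sha256:a31dbd3063d77def5b2562dc8e14ed3f578f1f90a89670ae620fd62ae7cd6ee7",
        "sha256:b079b3fa8d1b4b30a71a6e81763ed3da1327abaf0680ed3ed9f00ad1d5de5e7c"
      ]
    }
  }
]
"""

image = json.loads(INSPECT_OUTPUT)[0]      # docker inspect prints a JSON array
layers = image["RootFS"]["Layers"]         # index 0 is the base (bottom) layer

for index, digest in enumerate(layers):
    algorithm, hex_digest = digest.split(":", 1)
    print(index, algorithm, hex_digest[:12])
```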

Basic Commands

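$ docker ps (lists running containers; add -a to list all containers, including stopped ones)

$ docker run ImageName/ID (checks for the image locally; if it isn't there, pulls it from the registry, then creates and starts a container from it)

$ docker start ContainerName/ID (starts a stopped container)

$ docker kill ContainerName/ID (stops a running container immediately by sending SIGKILL; docker stop sends SIGTERM first for a graceful shutdown)

$ docker rm ContainerName/ID (deletes a stopped container)

$ docker rm $(docker ps -a -q) (deletes all stopped containers; running ones are refused with an error)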
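The rules behind these commands can be summed up as a tiny state machine. The Python Container class below is hypothetical and simplified (it leaves out pausing, restarting, and the difference between stop and kill), but it captures which commands are allowed in which state, for example that docker rm refuses a running container unless you force it with -f.

```python
class Container:
    """Hypothetical, simplified model of a container's lifecycle."""

    def __init__(self, image: str):
        self.image = image
        self.state = "created"

    def start(self) -> None:                    # docker start (docker run = create + start)
        if self.state == "removed":
            raise RuntimeError("no such container")
        self.state = "running"

    def kill(self) -> None:                     # docker kill
        if self.state != "running":
            raise RuntimeError("container is not running")
        self.state = "exited"

    def rm(self, force: bool = False) -> None:  # docker rm (-f kills it first)
        if self.state == "removed":
            raise RuntimeError("no such container")
        if self.state == "running" and not force:
            raise RuntimeError("cannot remove a running container; stop it or use -f")
        self.state = "removed"


c = Container("ubuntu:18.04")
c.start()
try:
    c.rm()                                      # refused: still running
except RuntimeError as err:
    print(err)
c.kill()
c.rm()
print(c.state)
```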

Recap with Demo:

- Pulling an image from a Docker registry

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

$ docker pull ubuntu:18.04

18.04: Pulling from library/ubuntu

7413c47ba209: Pull complete

0fe7e7cbb2e8: Pull complete

1d425c982345: Pull complete

344da5c95cec: Pull complete

Digest: sha256:c303f19cfe9ee92badbbbd7567bc1ca47789f79303ddcef56f77687d4744cd7a

Status: Downloaded newer image for ubuntu:18.04

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu 18.04 3556258649b2 9 days ago 64.2MB

- Running the image to create a container (-i keeps STDIN open and -t allocates a pseudo-terminal; together, -it gives you an interactive session)

$ docker run -it ubuntu:18.04

root@4183618bcf17:/# ls

bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@4183618bcf17:/# exit

exit

- Listing Containers

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS

4183618bcf17 ubuntu:18.04 "/bin/bash" 4 minutes ago Exited